Most optical character recognition(OCR) software first aligns the image properly before detecting the text in it. Here I’ll show you how to do that with OpenCV:

First we need to import opencv:

import cv2

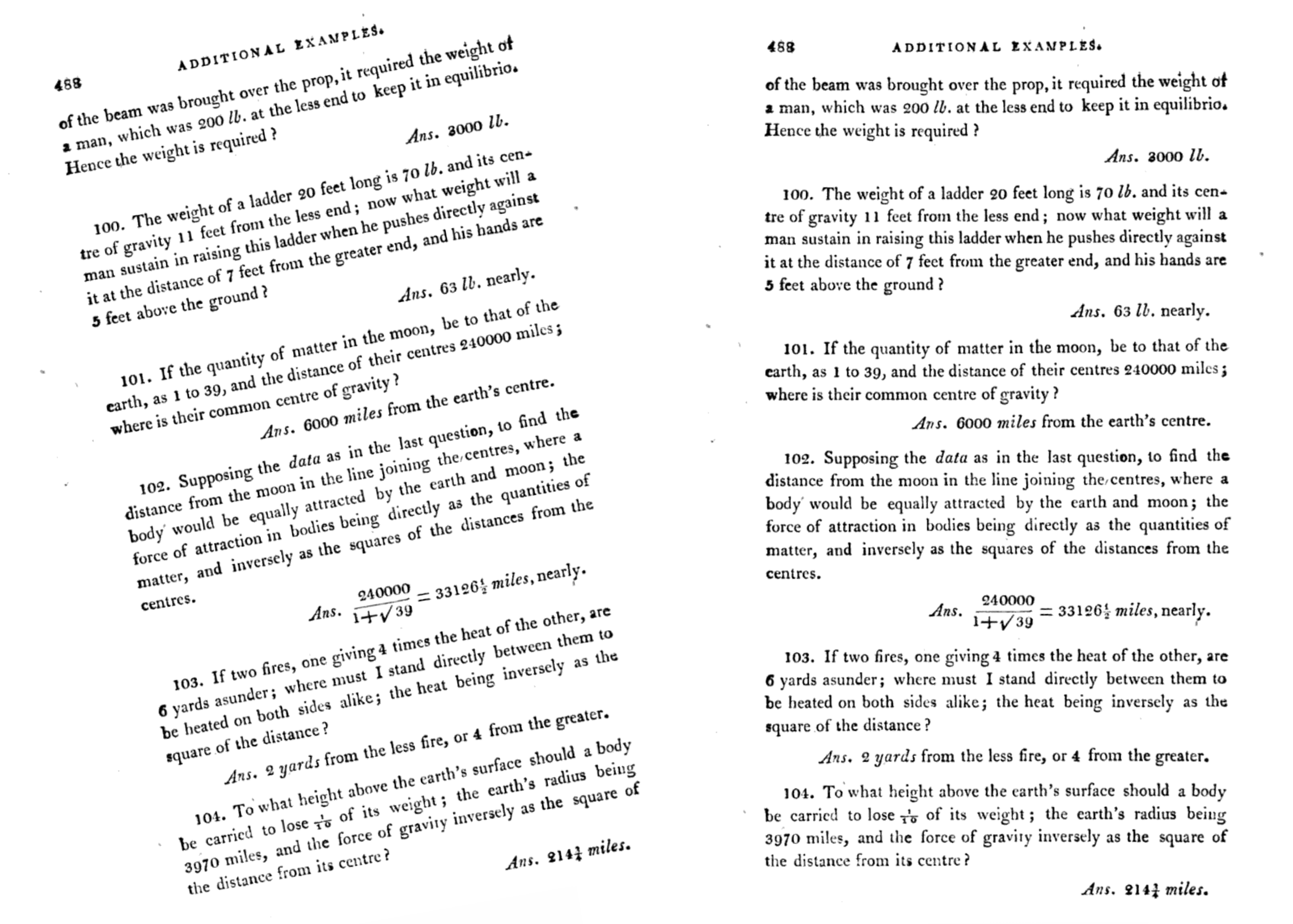

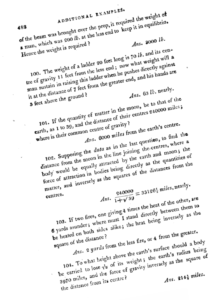

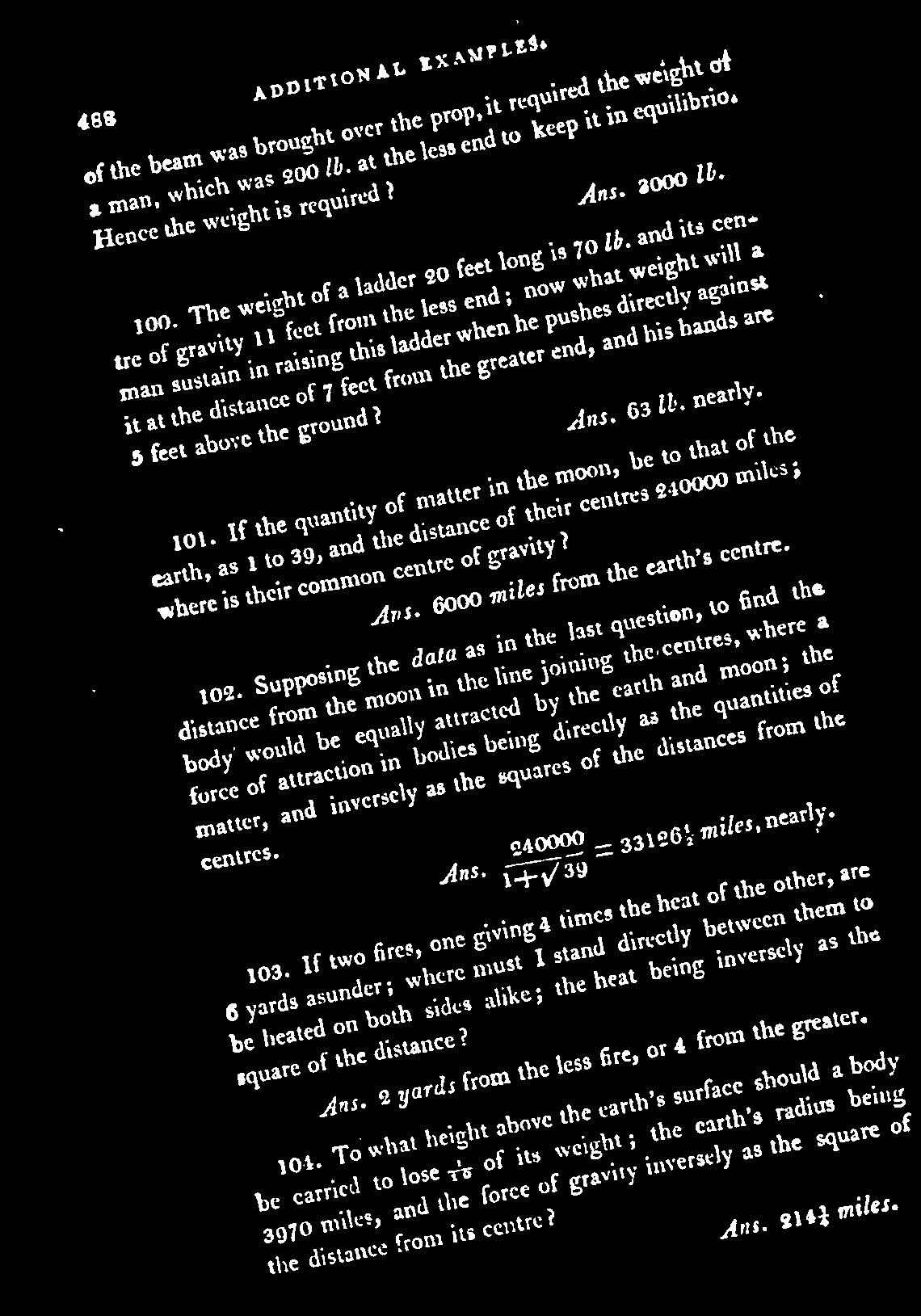

Let’s read an image in. You probably will have a color image, so first we need to convert it to gray scale (one channel only).

image = cv2.imread("text_rotated.png")

gray = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)

We’re interested in the text itself and nothing else, so we’re going to create an image that represents this. Non-zero pixels represent what we want, and zero pixels represent background. To do that, we will threshold the grayscale image, so that only the text has non-zero values. The explanation of the inputs is simple: gray is the grayscale image used as input, the next argument is the threshold value, set to 0, although it is ignored since it will be calculated by the Otsu algorithm(it’s 170 in this case) because we’re using THRESH_OTSU flag. The next argument is 255 which is the value to set the pixels that pass the threshold, and finally the other flag indicates that we want to use a binary output (all or nothing), and that we want the output reversed (since the text is black in the original). The returned values are the actual value of the threshold to be used as calculated by the Otsu algorithm(otsu_thresh), and the thresholded image itself (thresh).

otsu_thresh, thresh = cv2.threshold(gray, 0, 255, cv2.THRESH_BINARY_INV | cv2.THRESH_OTSU)

First we need to remove those extra white pixels outside of the area of the text. We can do that with the morphological operator Open, which basically erodes the image (makes the white areas smaller), and then dilates it back (make the white areas larger again). By doing that any small dots, or noise in the image, will be removed. The larger the kernel size, the more noise will be removed. You can do this either by hand, or with an iterative method that checks, for example, the ratio of non-zero to zero pixels for each value, and select the one that maximizes that metric.

kernel_size = 4

ksize=(kernel_size, kernel_size)

kernel = cv2.getStructuringElement(cv2.MORPH_RECT, ksize)

thresh_filtered = cv2.morphologyEx(thresh, cv2.MORPH_OPEN, kernel)

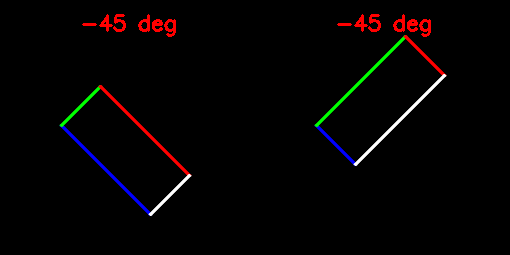

Now that we have an image that represents the text, we’re interested in knowing the angle in which it is rotated. There are a few different ways of doing this. Since OpenCV has a convenient function that gives you the minimum rectangle that contains all non-zero values, we could use that. Let’s see how it works:

nonZeroCoordinates = cv2.findNonZero(thresh_filtered)

imageCopy = image.copy()

for pt in nonZeroCoordinates:

imageCopy = cv2.circle(imageCopy, (pt[0][0], pt[0][1]), 1, (255, 0, 0))

We can now use those coordinates and ask OpenCV to give us the minimum rectangle that contains them:

box = cv2.minAreaRect(nonZeroCoordinates)

boxPts = cv2.boxPoints(box)

for i in range(4):

pt1 = (boxPts[i][0], boxPts[i][1])

pt2 = (boxPts[(i+1)%4][0], boxPts[(i+1)%4][1])

cv2.line(imageCopy, pt1, pt2, (0,255,0), 2, cv2.LINE_AA);

The estimated angle can then be simply retrieved from the returned rectangle. Note: Remember to double check the returned angle, as it might be different to what you’re expecting. The function minAreaRect always returns angles between 0 and -90 degrees.

angle = box[2]

if(angle < -45):

angle = 90 + angle

Once you have the estimated angle, it’s time to rotate the image back. First we calculate a rotation matrix based on the angle, and then we apply it. The rotation angle is expressed in degrees, and positive values mean a counter-clockwise rotation.

h, w, c = image.shape

scale = 1.

center = (w/2., h/2.)

M = cv2.getRotationMatrix2D(center, angle, scale)

rotated = image.copy()

cv2.warpAffine(image, M, (w, h), rotated, cv2.INTER_CUBIC, cv2.BORDER_REPLICATE )

And that’s it. You now have an aligned image, ready to be parsed for OCR, or any other application.

THANK YOU!